AI Made Easy: Your Guide to Fast and Reliable AI/ML Workload Deployment

Companies are investing heavily in Artificial Intelligence and Machine Learning (AI/ML) to improve their products, accelerate their processes, and get an edge on their competitors. However, the AI landscape of software, tools, and techniques is growing larger by the hour. Fast and safe provisioning of these environments throughout their lifecycle could be the key to making it all work. Let’s take a look at how you can build a platform to get ahead in the AI/ML race.

The AI Lifecycle

The AI landscape is gigantic. The industry has overloaded so many tools, terms, services, and approaches that they often get mixed together, which can be extremely confusing. So, I should probably start by defining what the stages of a typical rollout of AI/ML workloads look like.

The process of building AI to support a function in your application or business has three stages.

- The learning stage: This is where you gather the data that is relevant to the information you want to process.

- The modeling stage: In this stage, you use the data you gathered to train a model that can predict patterns and answer questions about the data.

- The deployment stage: Without implementing your model in some way, you can’t use the AI to do anything. So, you might deploy your model as a chatbot like ChatGPT, an image generator, or a voice response tool.

Each of these stages can be further broken down into smaller, more specific milestones in the process.

However, you don’t just do this once. You need to continuously improve the quality of the data you are gathering, adjust your model or try new ones, and even perhaps deploy in a different way or in different places depending on how your AI needs to be accessed and used. This creates a lifecycle that will go on indefinitely (or until your AI model takes over the world 😀) and it requires managing the infrastructure and services at each stage and iteration for multiple developers and ML engineers.

The Challenges With AI/ML Workload Deployments

This lifecycle and the pace of change in the AI landscape present multiple challenges when it comes to managing and maintaining all the infrastructure required for AI/ML workloads. Here are just a few examples:

Maintaining infrastructure consistency

AI platforms require deployment across multiple environments (development, staging, production) and multiple cloud providers. Ensuring that all these environments are consistent in terms of configuration and infrastructure can be a daunting task.

Resource allocation and management

AI workloads, especially training deep learning models, require specific resources like GPU instances. Efficiently allocating and managing such resources without wastage can be a unique and complex challenge.

Complex dependencies and pipelines

AI platforms might rely on a mesh of services, databases, and other infrastructure components. Managing dependencies, maintaining connectivity, and ensuring that services are set up in the correct order are all critical for reliability.
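
To make the ordering problem concrete, here is a minimal sketch that derives a safe provisioning order from a dependency map using Python's standard `graphlib` module. The component names and their dependencies are hypothetical and chosen only for illustration; a real platform would derive them from its own configuration.

```python
from graphlib import TopologicalSorter

# Hypothetical components for illustration only.
# Each key maps to the set of components it depends on.
DEPENDENCIES = {
    "network": set(),
    "cluster": {"network"},
    "gpu-node-group": {"cluster"},
    "database": {"network"},
    "model-service": {"gpu-node-group", "database"},
}


def provisioning_order(dependencies):
    """Return component names so that every dependency comes before its dependents."""
    # static_order() raises graphlib.CycleError if the dependencies are circular.
    return list(TopologicalSorter(dependencies).static_order())


if __name__ == "__main__":
    for step, name in enumerate(provisioning_order(DEPENDENCIES), start=1):
        print(step, name)
```

Several valid orders may exist for the same map. The only guarantee is that nothing is provisioned before the things it depends on, and that a circular dependency is reported as an error instead of silently producing a broken order.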

Security and compliance

Ensuring that all infrastructure components of an AI platform adhere to security best practices and compliance standards can be a significant concern. This is especially true when teams go rogue and try to do things themselves.

Portability across cloud providers

Vendor lock-in is a concern for many organizations. Building an AI platform on one cloud provider and then moving it to another can be a massive undertaking.

Scaling infrastructure

AI platforms often face variable workloads with real-time processing and large datasets. Infrastructure needs can spike during intensive tasks, like model training, and drop during idle times. Manually provisioning resources doesn’t scale, and relying on scripts can lead to configuration drift.

Building an AI/ML Platform

There is no one-size-fits-all solution for AI/ML infrastructure. Every business will need a unique AI/ML infrastructure stack that fits its needs. So, what’s needed here is a platform that can help with all of these concerns and make it significantly easier for a platform team to keep up with the relentless stream of requests for AI infrastructure.

The ideal AI/ML platform should provide:

- Custom APIs that developers can use to deploy resources

- Declarative configurations that can enforce consistency

- A provider-agnostic approach to ensure portability

- An ecosystem of integrations for the most common services

- An easy way to integrate new and custom services

- Automatic reconciliation of changes to keep everything up to date
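
As a rough illustration of how declarative configuration and automatic reconciliation fit together, the sketch below compares a desired state with an observed state and works out which changes would bring them back in line. It is a simplified model and not how any particular control plane is implemented; the function names and the resource names in the example are hypothetical.

```python
def plan_changes(desired, observed):
    """Return the (action, name) pairs needed to make observed match desired."""
    actions = []
    for name, spec in desired.items():
        if name not in observed:
            actions.append(("create", name))
        elif observed[name] != spec:
            # The resource exists but has drifted from its declared spec.
            actions.append(("update", name))
    for name in observed:
        if name not in desired:
            actions.append(("delete", name))
    return actions


def reconcile(desired, observed):
    """Apply the plan to a copy of the observed state and return the result."""
    result = dict(observed)
    for action, name in plan_changes(desired, observed):
        if action == "delete":
            del result[name]
        else:
            result[name] = desired[name]
    return result


if __name__ == "__main__":
    # Made-up resources: what was declared versus what is actually running.
    desired = {"cluster": {"nodes": 3}, "gpu-nodes": {"nodes": 2}}
    observed = {"cluster": {"nodes": 1}, "scratch-bucket": {}}
    print(plan_changes(desired, observed))
    print(reconcile(desired, observed))
```

Running the same comparison repeatedly is what keeps drift in check: once the observed state matches the declared one, the plan comes back empty and nothing more is changed.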

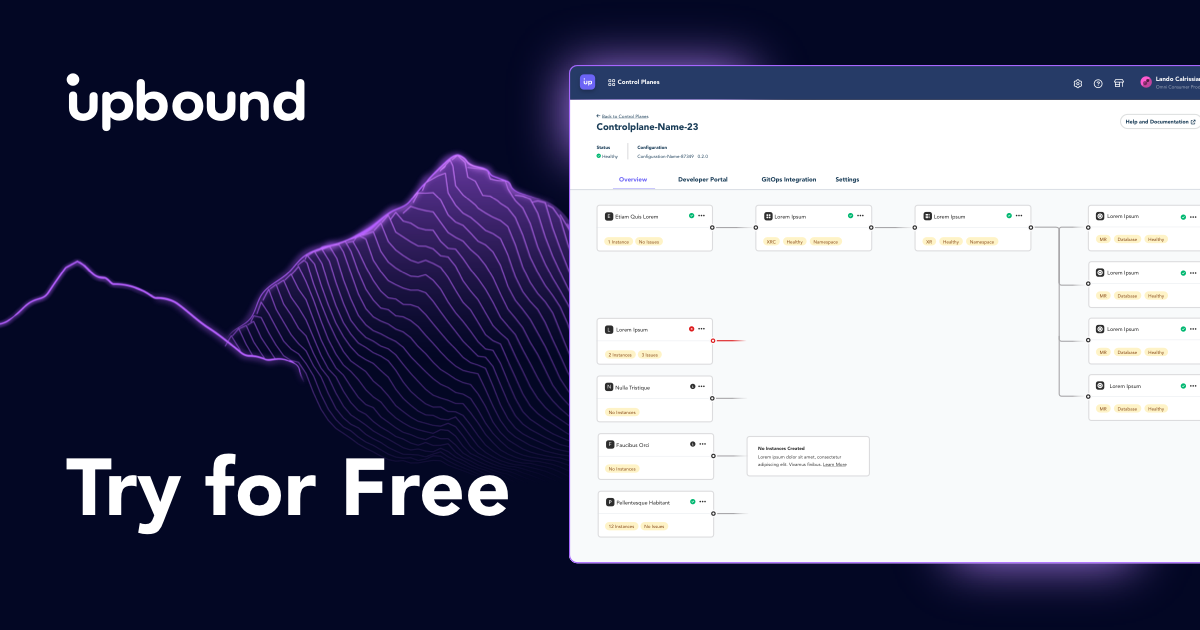

Upbound uses managed control planes to provide a platform that offers easy, consistent deployment of AI/ML infrastructure and services for developers and ML engineers. Upbound addresses each requirement listed above so you don’t have to worry about failed deployments, compliance, or security concerns. By standardizing on a flexible platform with API abstractions there is no need for shadow IT to creep into your environment.

From data collection and preparation to model deployment and retraining, Upbound can help at all stages of your AI/ML journey. With Upbound, you can not only deploy representative and reproducible infrastructure at the beginning of your project but also add new services and applications as needed. All this while enabling organizations to balance performance, cost, and developer experience when constructing the right infrastructure stack.

To illustrate how it all works, we have created an example configuration in the Upbound Marketplace that you can use with Upbound or Crossplane to deploy your own AI environment using Stable Diffusion. This AI application will learn about images of dogs and will be able to produce a reasonable dog portrait on demand. By following the guide in the configuration README, you will learn how to use a control plane to automatically:

- Configure a VPC with subnets and gateways

- Deploy a Kubernetes cluster using AWS EKS

- Configure a load balancer

- Configure the node groups for core components and GPU processing

- Install the NVIDIA Device Plugin

- Configure JupyterHub to facilitate machine learning

- Set up Ingress NGINX

- Install and configure Flux to automate the deployment and continuous synchronization

- Deploy the KubeRay operator for scaling

Phew! That is a lot! It is also exactly the reason we need a cloud-native platform like Upbound to help out. It would be difficult enough to do these tasks by yourself one time. Imagine trying to do all this hundreds of times a week!

To prove that this all works as advertised, here is one of the pictures that was produced by using this configuration to drastically simplify the process of setting up AI/ML infrastructure on demand. Try it for yourself and share your favorite AI doggie with the world.

What's Next?

To get started using Upbound as your cloud-native platform for deploying and managing AI/ML workloads, sign up for a free 30-day trial and try out the AI/ML configuration (also in the Upbound Marketplace). Be sure to let us know if you have any questions, and enjoy all the free doggy portraits!